Innovation excites us and unlocks business value, but unless it inherently scales profitably, you could end up at a dead end. This talk looks at NVDIMM-N as a cautionary tale of the commercialization pitfalls that kill innovative technologies. How can you analyse a technology not just for the value it unleashes but also for its long-term commercial viability? How is CXL different? What types of CXL implementations should you consider, and which should you be wary of?

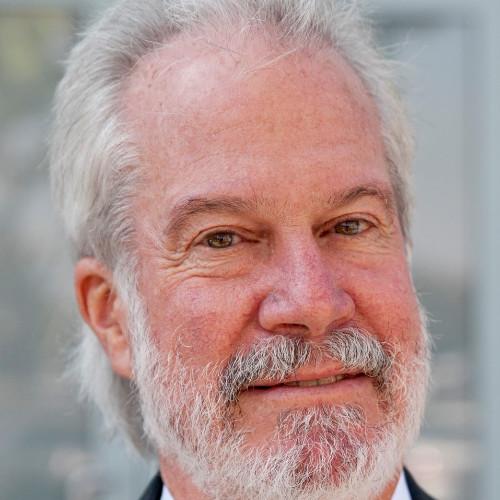

Keith Winkeler

Keith Winkeler is VP of Engineering, PowerEdge & Core Compute Platforms at Dell Technologies. Keith is an accomplished and collaborative executive with 30 years of demonstrated excellence in building and leading high-performance global organizations responsible for the architectural definition, development and delivery of industry-leading HW/SW products. His proven strengths include talent management and development, organizational design, leadership of matrixed and global teams, ODM partner leadership and value chain management.