The leading companies at every level of the data center value chain, from IP, chips, and platforms to system OEMs and hyperscalers, are coalescing around CXL technology as a path to revolutionize the data center. As workload demands increase rapidly, the need for more memory bandwidth and capacity continues to rise. Memory is critically important, and its share of the server bill of materials (BoM) continues to grow, so making the best use of memory resources is imperative. With CXL technology, the industry is pursuing tiered-memory solutions that can break through the memory bottleneck while delivering greater efficiency and improved total cost of ownership (TCO). Ultimately, CXL technology can support composable architectures that match the amount of compute, memory, and storage to the needs of a wide range of advanced workloads on demand.

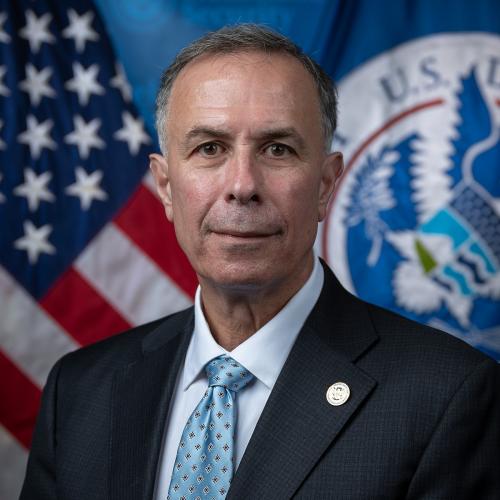

Mark Orthodoxou

Mark Orthodoxou is the Vice President of Strategic Marketing for Rambus’ Datacenter Products Group. Mark has over 25 years of experience in product management and strategic planning in the semiconductor industry across multiple technology disciplines, including enterprise storage, data center compute, memory subsystems and networking. Mark has evangelized the benefits of serial-attached memory since long before CXL was introduced as a standard and was responsible for the introduction of the first commercially available products in this space. Mark currently sits on the CXL Consortium Marketing Working Group. He has held various leadership positions at Microchip, Microsemi, PMC-Sierra, and IDT.

Rambus

Website: https://www.rambus.com/

Rambus is a provider of industry-leading chips and silicon IP that make data faster and safer. With over 30 years of advanced semiconductor experience, we are a pioneer in high-performance memory subsystems that solve the bottleneck between memory and processing for data-intensive systems. Whether in the cloud, at the edge, or in your hand, real-time and immersive applications depend on data throughput and integrity. Rambus products and innovations deliver the increased bandwidth, capacity, and security required to meet the world’s data needs and drive ever-greater end-user experiences. For more information, visit rambus.com.