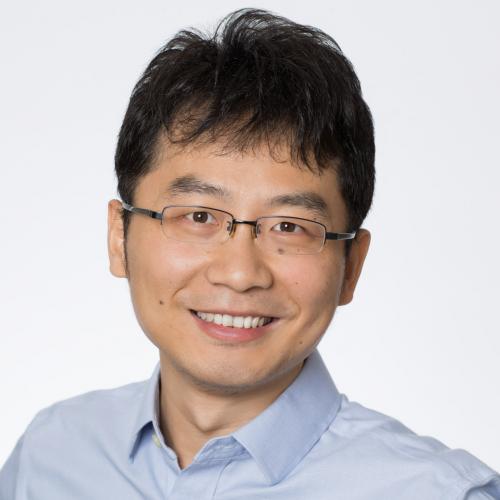

I was drawn to Rambus to focus on cutting-edge computing technologies. Throughout my 15+ year career, I’ve helped invent, create and develop ways to drive and extend performance in both hardware and software solutions. At Rambus, we are solving challenges that are completely new to the industry and that arise in response to highly sophisticated deployments.

As an inventor, I find myself approaching a challenge like a room filled with 100,000 pieces of a puzzle where it is my job to figure out how they all go together – without knowing what it is supposed to look like in the end. For me, the job of finishing the puzzle is as enjoyable as the actual process of coming up with a new, innovative solution.

For example, RDRAM®, our first mainstream memory architecture, has been implemented in hundreds of millions of consumer, computing and networking products from leading electronics companies, including Cisco, Dell, Hitachi, HP and Intel. We did a lot of novel things that required inventiveness: we pushed the envelope and created state-of-the-art performance without making actual changes to the infrastructure.

I’m excited about the new opportunities that arise as computing becomes more and more pervasive in our everyday lives. In a world full of data, my job, and that of my fellow inventors, will be to stay curious, maintain an inquisitive approach and create solutions that are technologically superior and that seamlessly intertwine with our daily lives.

After an inspiring work day at Rambus, I enjoy spending time with my family, being outdoors, swimming, and reading.

Education

- Ph.D., Electrical Engineering, Stanford University

- M.S., Electrical Engineering, Stanford University

- Master of Engineering, Harvey Mudd College

- B.S., Engineering, Harvey Mudd College